In the digital ecosystem, a server is only as stable as its traffic. Uncontrolled traffic can quickly overwhelm a server, leading to performance degradation, crashes, and a poor user experience.

While you might expect traffic spikes from legitimate events like a marketing campaign or a new product launch, a sudden surge can also be a malicious attack, such as a Distributed Denial of Service (DDoS) or simply a misbehaving bot. The key to maintaining server health and ensuring a smooth user experience lies in proactive traffic management. This is where rate limiting comes in.

This blog serves as a comprehensive guide on implementing rate limiting with Nginx, a crucial technique for managing server traffic, preventing overload, and optimizing performance. It’s aimed at system administrators, DevOps engineers, and web developers who are responsible for maintaining a stable and performant web environment. By mastering these techniques, you can transform Nginx into a powerful guardian for your web services.

What is rate limiting?

At its core, rate limiting is a network management strategy used to control the number of requests a client can make to a server within a specific time frame. It’s a mechanism that sets a cap on the frequency of a particular action, such as a user’s API calls or a bot’s scraping activity.

A common model for this is the "leaky bucket algorithm," where requests are like water pouring into a bucket. The bucket drains at a fixed rate (your limit). Requests that fit in the bucket wait their turn, and any excess that overflows is rejected. By setting these limits, you prevent any single user or automated process from consuming all of the server’s resources, ensuring fair access for everyone.

Why should we use rate limiting?

Implementing a rate-limiting strategy is not just about protection; it's a foundational element of a robust and scalable web architecture.

- Prevents Server Overload: The most critical reason for rate limiting is to protect your servers from excessive traffic that can cause them to crash or become unresponsive. It acts as a shield against brute-force attacks, DDoS attempts, and sudden, unexpected traffic spikes.

- Reduces Bandwidth Costs: Uncontrolled requests, especially from bots and scripts, can lead to unnecessary data usage and increased bandwidth costs. Rate limiting helps control this, ensuring that your infrastructure costs remain optimized.

- Improves User Experience: By ensuring fair access, rate limiting prevents a few "heavy" users from consuming resources and slowing down the experience for everyone else. It guarantees that legitimate users can access your services consistently.

- Throttles API Usage: For services with public APIs, rate limiting is essential for enforcing usage limits based on subscription plans or user tiers. A free-tier user might be limited to 100 requests per minute, while a premium user can have 1,000. This is a critical component of monetizing and managing API services.

Common use cases

Rate limiting is a versatile tool used across many industries to maintain system health and fairness. Here are some of its most common applications.

Public APIs

Public-facing APIs (Application Programming Interfaces) are often the first place people encounter rate limiting. Developers building applications on top of these APIs need predictable performance, and the API provider needs to control its resource usage.

By setting limits, a provider can offer different service levels. For instance, a free tier might have a modest request limit, while a paid or enterprise tier offers a much higher limit. This model helps monetize the API and ensures that a single free user can't degrade performance for everyone.
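In Nginx, which the rest of this guide covers, tiering of this kind can be sketched with two zones whose keys are switched by a map. This is a minimal sketch, not a definitive setup: the X-Api-Tier header, the zone names, and the burst values are all assumptions made for illustration, and the rates reuse the 100 and 1,000 requests-per-minute figures mentioned earlier. It relies on the documented behavior that requests with an empty key are not counted against a zone.

```nginx
# Goes in the http block.
# Assumption: a trusted auth layer in front of this server sets the
# X-Api-Tier header to "premium" for paying clients.
map $http_x_api_tier $free_key {
    default  $binary_remote_addr;   # free tier: counted per client IP
    premium  "";                    # empty key: not counted in this zone
}

map $http_x_api_tier $premium_key {
    default  "";                    # non-premium clients skip this zone
    premium  $binary_remote_addr;
}

# Hypothetical zone names; rates follow the free/premium example figures.
limit_req_zone $free_key    zone=free_tier:10m    rate=100r/m;
limit_req_zone $premium_key zone=premium_tier:10m rate=1000r/m;

server {
    location /api/ {
        # Both limits are listed, but each request has a non-empty key
        # in only one of the two zones, so only one limit applies.
        limit_req zone=free_tier    burst=20  nodelay;
        limit_req zone=premium_tier burst=100 nodelay;
    }
}
```

A header that clients can set themselves should not decide the tier. In practice the tier would come from something the server can verify, such as an authenticated API key.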

Login systems

Login pages are a prime target for malicious actors attempting to guess passwords. This is known as a brute-force attack. Without rate limiting, an attacker could try thousands of password combinations per second.

By implementing a rate limit (e.g., allowing only 5 failed login attempts per minute from a single IP address), you can dramatically slow down these attacks. This gives your security systems time to detect and block the malicious activity, protecting user accounts.

Web scraping protection

Many websites have valuable data that attackers might want to "scrape" or download automatically. This could be anything from product prices on an e-commerce site to articles on a news blog. Automated bots can make an enormous number of requests in a short time, putting a heavy load on the server.

Rate limiting caps how quickly any single client can pull pages and rejects the excess requests from an aggressive bot. This slows down bulk scraping, protects the site's content, and ensures that human users have a smooth experience.

E-commerce and ticketing sites

High-traffic events like flash sales, new product drops, or concert ticket releases can bring a massive, sudden surge of traffic. Without proper controls, the server can become overwhelmed, leading to slow loading times or crashes.

Rate limiting helps manage this demand. It ensures that everyone has a fair chance by preventing a small number of users, often using automated scripts, from making an excessive number of requests and buying up all the inventory.

Social media platforms

Social media sites use rate limiting to combat spam and abuse. For example, a user might be limited to how many posts they can make, how many direct messages they can send, or how many accounts they can follow in a single hour. This not only prevents spammers from flooding the network but also helps maintain the quality and integrity of the platform by encouraging more natural, human-like behavior.

How can we use rate limiting with Nginx?

Nginx is a popular, efficient web server, and its ngx_http_limit_req_module provides flexible request rate limiting. Implementing it is a two-step process.

Define a rate limit zone

First, you need to define a shared memory zone where Nginx will store the state of requests. This zone tracks how many requests have been made from a particular source (like an IP address) and how quickly. This is done using the limit_req_zone directive, typically placed in the http block of your Nginx configuration.

    http {
        limit_req_zone $binary_remote_addr zone=limit_zone:10m rate=10r/s;

        # ... other configurations
    }

Apply rate limiting

Next, you apply the rate limit to a specific location or server block using the limit_req directive.

    server {
        location /login/ {
            limit_req zone=limit_zone burst=20 nodelay;

            # ... other configurations for the login endpoint
        }
    }

Breakdown of Nginx configuration rate limiting parameters

To effectively configure rate limiting, it's crucial to understand what each parameter does.

1. limit_req_zone Directive

- $binary_remote_addr: This is the most common variable for rate limiting, as it uniquely identifies the client's IP address. This ensures that the rate limit is applied on a per-IP basis. You can also use $request_uri to limit requests per specific URL, or $server_name to limit requests per virtual host. $binary_remote_addr is used instead of $remote_addr because it's more memory-efficient.

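- zone=limit_zone:10m: This defines a shared memory zone named limit_zone with a size of 10 megabytes. This memory stores the state of each key (here, each IP address), such as the time of its last request and its current count of excess requests. According to the Nginx documentation, one megabyte holds about 16,000 states at 64 bytes each, or about 8,000 at 128 bytes each (the size on 64-bit platforms), so a 10MB zone tracks roughly 80,000 to 160,000 IP addresses, which is enough for most use cases.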

- rate=10r/s: This sets the request rate limit. In this example, it's set to 10 requests per second. Nginx tracks this at millisecond granularity, so this is equivalent to one request every 100ms. You can also set a rate per minute, for example, rate=300r/m (5 requests per second).
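The key and rate options described above can be combined in several zones side by side. The following is a sketch only: the zone names, sizes, and rates are hypothetical values chosen for illustration.

```nginx
http {
    # Per-client limit expressed per minute: 300r/m averages 5 requests per second.
    limit_req_zone $binary_remote_addr zone=per_ip:10m rate=300r/m;

    # Per-virtual-host limit: all clients of one server_name share this budget.
    limit_req_zone $server_name zone=per_vhost:1m rate=200r/s;

    # Per-URL limit: each distinct request URI, query string included,
    # gets its own counter, so this zone can fill up quickly.
    limit_req_zone $request_uri zone=per_uri:10m rate=50r/s;
}
```

Keys with many distinct values, such as $request_uri, need a larger zone than keys with few values, such as $server_name.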

2. limit_req Directive

- zone=limit_zone: This links the limit_req directive to the shared memory zone you defined earlier, applying its rules.

- burst=20: This is a crucial parameter that allows for a temporary "burst" of requests that exceed the defined rate. It defines a queue size. In this case, it permits a client to make up to 20 extra requests above the rate limit. This is essential for handling legitimate traffic that might arrive in a sudden burst, like a user loading a single web page that triggers multiple API calls.

- nodelay: By default, Nginx queues requests that exceed the rate (up to the burst size) and releases them at the configured rate, so a queued request can wait noticeably before it is served. The nodelay parameter forwards those burst requests immediately instead, while still marking the burst slots as taken and freeing them at the configured rate. In both modes, requests beyond the burst limit are rejected. nodelay is often preferred for high-traffic APIs, where queueing delays can cause a cascade of timeouts.
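To compare the burst options side by side, here is a minimal sketch using the limit_zone definition from earlier. The three location paths and the listen port are assumptions for illustration, and the delay parameter requires Nginx 1.15.7 or later.

```nginx
http {
    limit_req_zone $binary_remote_addr zone=limit_zone:10m rate=10r/s;

    server {
        listen 80;  # assumption: plain HTTP listener for illustration

        # All three locations reference the same zone, so they share one
        # counter per client IP.

        # Queue only: up to 20 excess requests wait and are released
        # one every 100 ms. Anything beyond the queue is rejected.
        location /queued/ {
            limit_req zone=limit_zone burst=20;
        }

        # No delay: up to 20 excess requests are forwarded at once.
        # Burst slots free up again at 10 per second.
        location /immediate/ {
            limit_req zone=limit_zone burst=20 nodelay;
        }

        # Two-stage: the first 8 excess requests pass without delay,
        # the next 12 are paced, and the rest are rejected.
        location /two-stage/ {
            limit_req zone=limit_zone burst=20 delay=8;
        }
    }
}
```

The two-stage form can suit page loads that fire a handful of requests at once, since it lets that small cluster through quickly and still smooths out longer bursts.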

Additional considerations for effective rate limiting

Setting custom rate limits for different endpoints

Not all parts of your website or application are equally sensitive to high traffic. You can apply different rate limits to different endpoints to tailor your protection.
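Every zone named in a limit_req directive must first be defined with limit_req_zone in the http block. The location blocks in this section use three zone names, so matching definitions are needed. The sketch below shows one way to write them. The rates are assumptions for illustration, not recommendations.

```nginx
http {
    # Hypothetical rates; tune them against your own traffic.
    limit_req_zone $binary_remote_addr zone=public_api_limit:10m rate=20r/s;
    limit_req_zone $binary_remote_addr zone=admin_api_limit:10m  rate=5r/s;
    limit_req_zone $binary_remote_addr zone=login_limit:10m      rate=5r/m;
}
```

With the zones in place, each location applies its own limit: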

    location /api/v1/public/ {
        # A more lenient limit for public endpoints
        limit_req zone=public_api_limit burst=50 nodelay;
    }

    location /api/v1/admin/ {
        # A tighter limit for sensitive admin pages
        limit_req zone=admin_api_limit burst=5 nodelay;
    }

    location /login/ {
        # A very strict limit to prevent brute-force attacks
        limit_req zone=login_limit burst=5 nodelay;
    }

Monitoring and logging rate limiting activity

To understand the effectiveness of your rate-limiting strategy and to identify potential threats, it’s vital to monitor and log rate-limited requests. Nginx configuration can log requests with specific formats, providing valuable insights.

    log_format rate_limit '$remote_addr - $status $request_time "rate_limit_status:$limit_req_status"';

    access_log /var/log/nginx/rate_limit.log rate_limit;

The $limit_req_status variable (available since Nginx 1.17.6) is particularly useful, as it logs whether a request was PASSED, DELAYED, or REJECTED, giving you granular insight into your rate-limiting policy's real-world behavior. The log_format directive belongs in the http block.
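Two further directives from the same module help when observing a policy before enforcing it. This is a sketch under assumptions: the /api/ path and the listen port are hypothetical, and limit_req_dry_run requires Nginx 1.17.1 or later.

```nginx
http {
    limit_req_zone $binary_remote_addr zone=limit_zone:10m rate=10r/s;

    server {
        listen 80;  # assumption: plain HTTP listener for illustration

        location /api/ {
            limit_req zone=limit_zone burst=20 nodelay;

            # Log rejections to the error log at "warn" instead of the
            # default "error". Delays are logged one level lower ("notice").
            limit_req_log_level warn;

            # Count excess requests as usual but do not reject or delay them.
            limit_req_dry_run on;
        }
    }
}
```

In dry-run mode, $limit_req_status reports REJECTED_DRY_RUN or DELAYED_DRY_RUN for requests that would have been limited. This lets you check a new limit against real traffic before switching dry run off.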

Best practices for rate limiting with Nginx

- Choose the right rate limits: The sweet spot is a balance between protecting your server and not overly restricting legitimate users. Start with a conservative limit and adjust as you monitor your traffic.

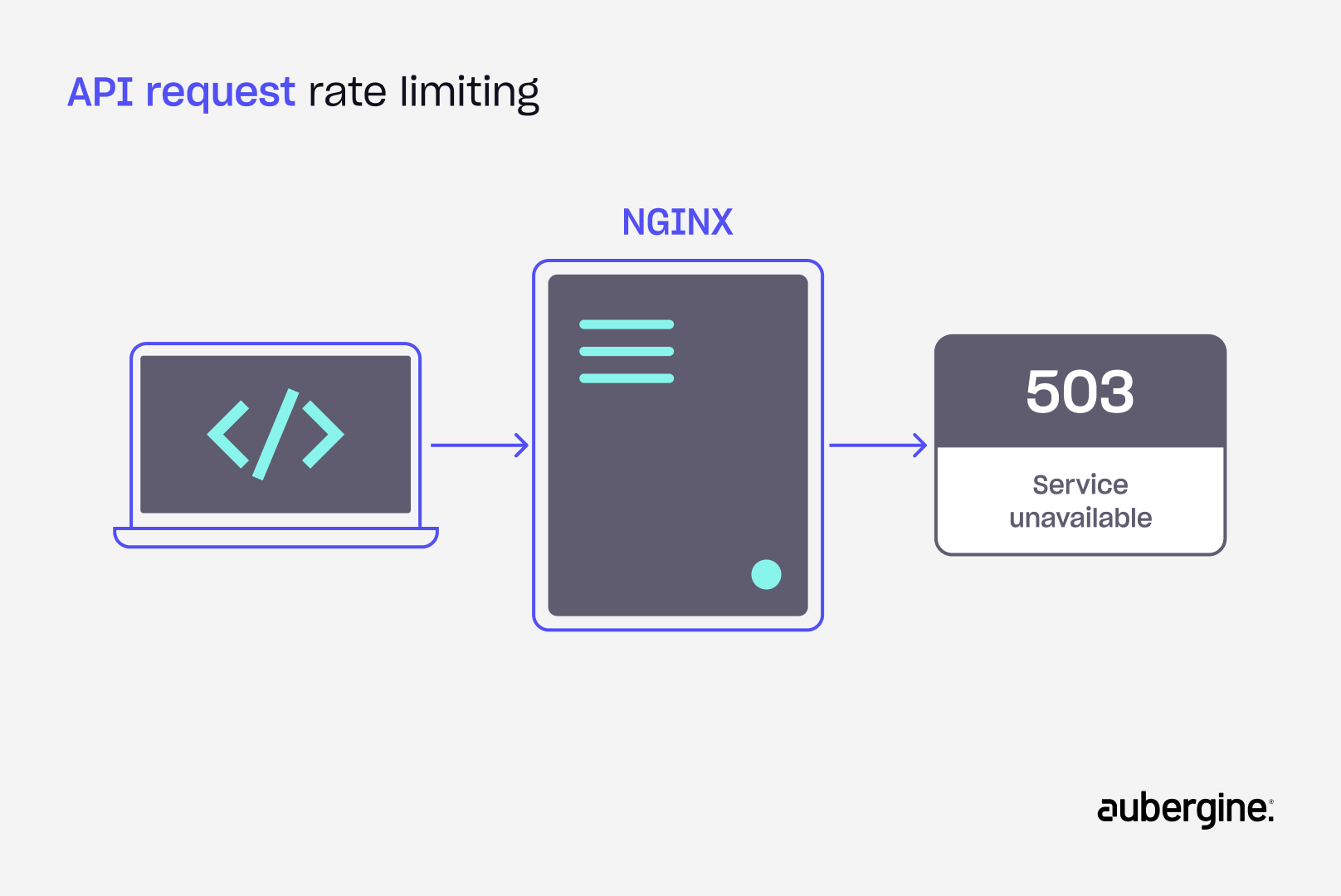

- Graceful error handling: By default, Nginx returns 503 (Service Temporarily Unavailable) when a request exceeds the rate limit. The limit_req_status directive changes this status code, and most modern APIs return 429 (Too Many Requests) instead. Ensure your application and front-end handle this response gracefully and display a clear, user-friendly message.

- Avoid over-limiting: Be careful not to create a poor user experience by imposing overly strict limits. Use the burst parameter wisely to allow for natural traffic spikes.

- Consider edge cases: Plan for users on dynamic IP addresses or potential abuse using proxies. For high-security endpoints, you might consider a combination of rate limiting by IP, cookie, or an API key.
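Two of the practices above, returning 429 and combining per-IP limits with per-key limits, can be sketched together. This is an illustration only. The X-API-Key header, the zone names, the rates, the Retry-After value, and the JSON body are all assumptions, not part of any standard setup.

```nginx
http {
    # Per-IP zone plus a per-API-key zone. Assumption: clients send their
    # key in an X-API-Key header. Requests without it have an empty key
    # and are not counted in the per_key zone.
    limit_req_zone $binary_remote_addr zone=per_ip:10m  rate=10r/s;
    limit_req_zone $http_x_api_key     zone=per_key:10m rate=50r/s;

    server {
        listen 80;  # assumption: plain HTTP listener for illustration

        location /api/ {
            # Both limits apply; a request is rejected if it exceeds either.
            limit_req zone=per_ip  burst=20  nodelay;
            limit_req zone=per_key burst=100 nodelay;

            # Return 429 Too Many Requests instead of the default 503.
            limit_req_status 429;
            error_page 429 @rate_limited;
        }

        # Named location that serves a small JSON body for rejected requests.
        location @rate_limited {
            default_type application/json;
            add_header Retry-After 1 always;  # "always" is needed on 4xx responses
            return 429 '{"error":"rate limit exceeded, retry shortly"}';
        }
    }
}
```

Keying a second zone on the API key helps with clients that share one IP address behind a proxy or NAT, and the per-IP zone still covers anonymous traffic.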

Conclusion

Implementing Nginx rate limiting is a simple yet powerful step towards a more stable, secure, and cost-effective server environment. By controlling the flow of traffic, you can prevent server overload, reduce bandwidth costs, and ensure a better user experience for everyone.

Rate limiting should be a core component of your overall server performance and security strategy. It’s a proactive measure that not only protects your infrastructure from abuse but also ensures that your services remain reliable and performant as your business grows.

Aubergine Solutions is dedicated to building robust and resilient digital solutions, and we understand that a strong foundation in web infrastructure is the key to long-term success.

Partner with us to implement powerful traffic management strategies and secure your web services against unexpected challenges.
