The European Accessibility Act, whose requirements began applying on June 28, 2025, has created a seismic shift in how technology companies approach inclusive design. Organizations are realizing that accessibility is a competitive advantage, and this post explores how artificial intelligence can fundamentally reimagine the way we create accessible digital experiences.

As someone who has spent years advocating for accessible design principles, I've witnessed firsthand how AI is moving us beyond traditional accommodation models toward truly adaptive, personalized experiences that respond to human needs in real time.

The convergence of AI and accessibility represents more than technological advancement; it embodies a paradigm shift from reactive compliance to proactive inclusion. Instead of retrofitting accessibility features after the fact, we're entering an era where intelligence can anticipate, adapt, and evolve alongside users across the entire spectrum of human ability and preference.

AI accessibility trends shaping product design in 2025

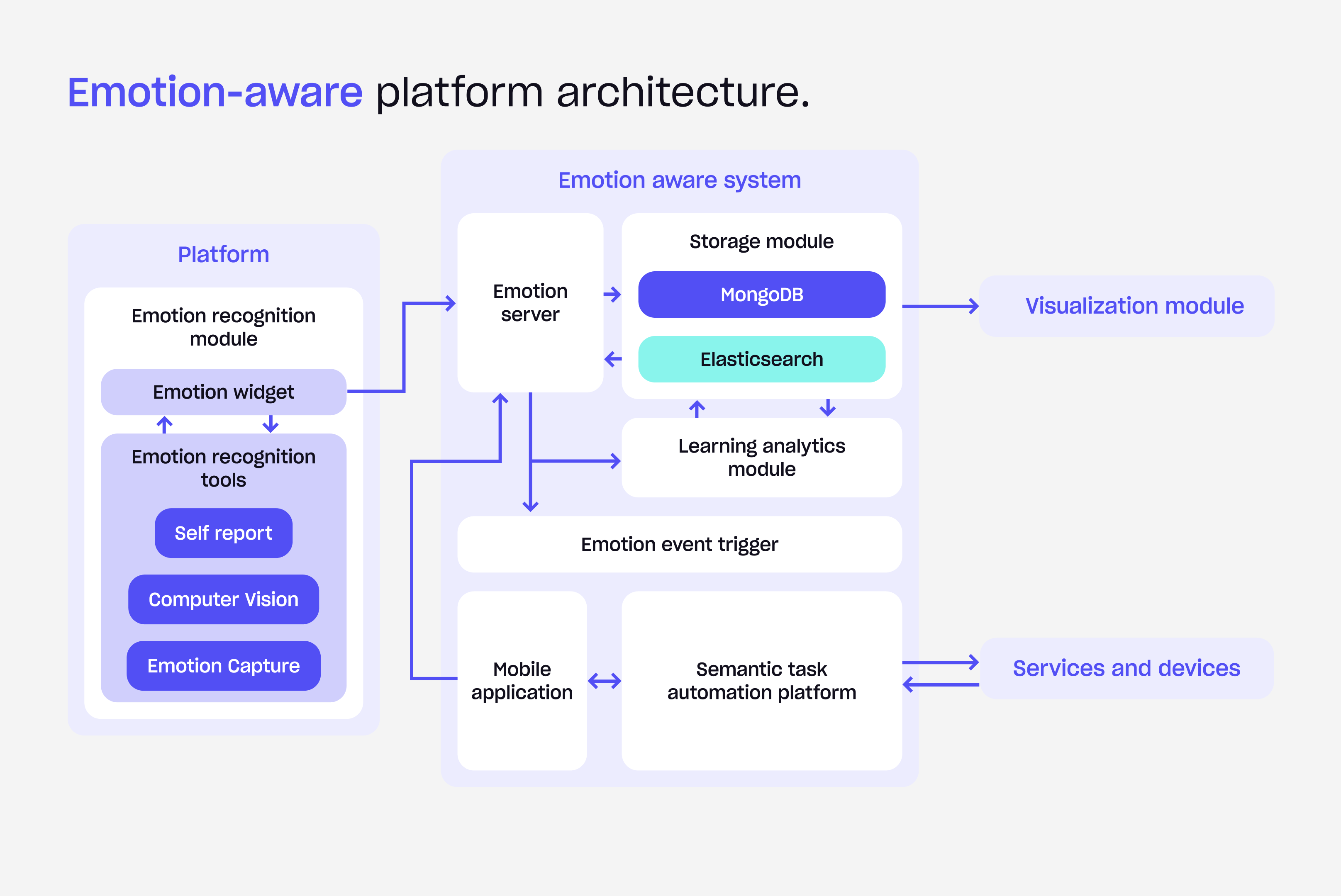

Emotion-aware interfaces in AI-powered accessibility

One of the most profound developments in AI-powered accessibility lies in emotion-aware interfaces that can infer and respond to users' emotional states. These systems combine facial expression analysis through camera sensors, voice tone sentiment analysis, and behavioral signal detection to create truly empathetic digital experiences.

Consider the subtle ways users communicate distress or confusion through their interactions. Rage clicks, extended hesitation periods, and facial expressions all provide valuable insights into user experience quality. AI systems can now detect these patterns and respond with appropriate interventions, whether that's simplifying navigation paths, offering alternative interaction methods, or providing contextual support.
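To make the interaction signals above concrete, here is a minimal Python sketch of how rage clicks and long hesitations might be flagged from an event log. The `Click` type, the function names, and the thresholds (three clicks within one second inside a 30-pixel radius; an 8-second idle gap) are illustrative assumptions, not values from any particular product; a production system would tune them against real usage data.

```python
from dataclasses import dataclass
from math import hypot
from typing import List


@dataclass
class Click:
    t_ms: int   # timestamp in milliseconds
    x: float    # viewport x coordinate in pixels
    y: float    # viewport y coordinate in pixels


def detect_rage_clicks(clicks: List[Click], min_clicks: int = 3,
                       window_ms: int = 1000, radius_px: float = 30.0) -> List[int]:
    """Return start indices of bursts of at least `min_clicks` clicks that all
    land within `window_ms` and `radius_px` of the burst's first click.
    Clicks are assumed to be sorted by timestamp."""
    bursts: List[int] = []
    i = 0
    while i < len(clicks):
        first = clicks[i]
        j = i + 1
        # Extend the burst while clicks stay close to the first one in time and space.
        while (j < len(clicks)
               and clicks[j].t_ms - first.t_ms <= window_ms
               and hypot(clicks[j].x - first.x, clicks[j].y - first.y) <= radius_px):
            j += 1
        if j - i >= min_clicks:
            bursts.append(i)
            i = j  # report each burst only once
        else:
            i += 1
    return bursts


def detect_hesitations(event_times_ms: List[int], threshold_ms: int = 8000) -> List[int]:
    """Return indices of events preceded by an idle gap longer than `threshold_ms`."""
    return [k for k in range(1, len(event_times_ms))
            if event_times_ms[k] - event_times_ms[k - 1] > threshold_ms]
```

A flagged burst or hesitation could then trigger one of the interventions described above, such as surfacing a simpler navigation path or a contextual help prompt.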

Research points to the tangible impact of this approach. One study of emotion-adaptive AI tutoring systems reported a 25% increase in student engagement compared with static learning environments. Gains like this could matter most for neurodiverse learners, users experiencing high anxiety, and people facing cognitive overload.

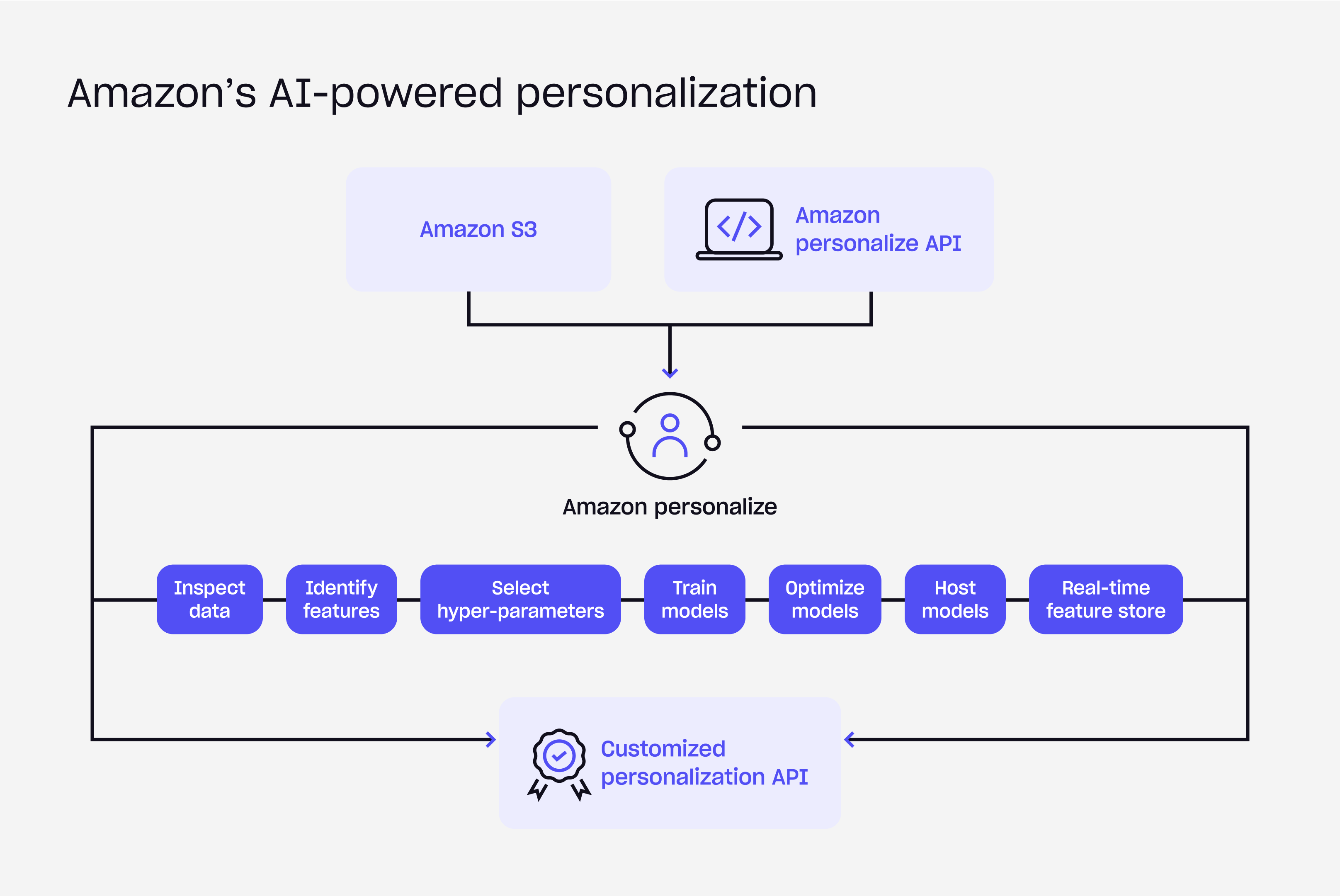

Real-time accessibility personalization with AI

Perhaps the most immediately practical application of AI in accessibility involves real-time UI personalization. These systems create dynamic user profiles based on preferences, device capabilities, and interaction patterns, then make instantaneous adjustments to CSS and DOM elements to optimize individual user experiences.
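As a minimal sketch of the profile-to-style step, the Python function below turns a hypothetical accessibility profile into CSS overrides that a front end could inject into the page. The profile fields, the 125% font scale, and the `.nav-secondary` class name are assumptions for illustration. Browsers also expose some preferences directly, such as the `prefers-reduced-motion` media query, which a real system should respect alongside any inferred profile.

```python
from dataclasses import dataclass


@dataclass
class AccessibilityProfile:
    # Hypothetical fields inferred from stated preferences and interaction patterns.
    low_vision: bool = False
    prefers_reduced_motion: bool = False
    simplified_navigation: bool = False


def css_overrides(profile: AccessibilityProfile) -> str:
    """Build a CSS string of overrides matching the given profile."""
    rules = []
    if profile.low_vision:
        # Larger base text and more generous line spacing.
        rules.append(":root { font-size: 125%; line-height: 1.6; }")
    if profile.prefers_reduced_motion:
        # Disable animations and transitions everywhere.
        rules.append("*, *::before, *::after { animation: none !important; "
                     "transition: none !important; }")
    if profile.simplified_navigation:
        # Hide secondary navigation (hypothetical class name).
        rules.append(".nav-secondary { display: none; }")
    return "\n".join(rules)
```

Because the output is plain CSS, the same profile can be applied on any device that loads the stylesheet, which is what removes the need for manual configuration on each platform.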

The power of this approach becomes evident in e-commerce environments. Amazon's implementation of adaptive product listings demonstrates how AI can automatically offer large-print modes for users with low vision while simultaneously adjusting navigation complexity based on individual cognitive profiles. Users with cognitive challenges receive simplified menu structures, while power users maintain access to comprehensive filtering options.

The benefits extend beyond accessibility itself: real-time personalization has been reported to increase user engagement by up to 25%.

What makes this particularly exciting is the elimination of manual configuration requirements. Users no longer need to navigate complex accessibility settings or remember to adjust their preferences across different devices and platforms.

The rise of multimodal user experiences

The evolution toward multimodal UX represents a fundamental shift in how we think about human-computer interaction. Modern AI systems can process voice commands, gestures, and eye-tracking data in parallel and respond through channels such as haptic feedback, creating more flexible and inclusive interaction paradigms.

Banking applications exemplify this multimodal approach beautifully. Users can now deposit checks using camera functionality with voice verification, navigate through complex menu systems using gesture controls, and receive confirmation feedback through haptic responses. This combination of interaction methods accommodates users with various physical limitations while creating more intuitive experiences for all users.

A prime example of this innovation is Citi’s AVIOS (Audio-Visual Interactive Operating System), a multimodal banking interface that combines voice recognition, facial recognition, and touch inputs. This system enables users to navigate banking services through spoken commands, facial authentication, and touch interactions, accommodating a wide range of user preferences and needs.
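One way to structure a multimodal interface like the banking examples above is to map every input channel onto the same set of app-level intents, so a voice command, a swipe, and a gaze dwell can all trigger the same action. The sketch below uses hypothetical intent names and input labels; the haptic patterns follow the alternating vibrate/pause millisecond convention used by the Web Vibration API's `navigator.vibrate()`.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical mapping from (modality, normalized input) to an app-level intent.
INTENT_MAP: Dict[Tuple[str, str], str] = {
    ("voice", "go back"): "NAVIGATE_BACK",
    ("gesture", "swipe_right"): "NAVIGATE_BACK",
    ("gaze", "dwell:back_button"): "NAVIGATE_BACK",
    ("voice", "confirm"): "CONFIRM",
    ("touch", "tap:confirm_button"): "CONFIRM",
}

# Haptic confirmation per intent: alternating vibrate/pause durations in ms.
HAPTIC_FEEDBACK: Dict[str, List[int]] = {
    "NAVIGATE_BACK": [30],
    "CONFIRM": [50, 30, 50],
}


def resolve_intent(modality: str, raw_input: str) -> Optional[str]:
    """Return the intent for an input event, or None if it is not recognized."""
    return INTENT_MAP.get((modality, raw_input.strip().lower()))


def feedback_for(intent: Optional[str]) -> List[int]:
    """Return the haptic pattern for an intent (empty if none applies)."""
    return HAPTIC_FEEDBACK.get(intent, []) if intent else []
```

Because every modality resolves to the same intents, supporting a new input method (a switch device, for example) means adding mapping entries rather than writing new navigation logic.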

How AI is transforming digital accessibility - Top 5 trends

1. AI in neuroadaptive UX for accessibility

Educational platforms are pioneering neuroadaptive interfaces that measure user cognitive load through wearable devices, such as EEG headbands. These systems adjust content difficulty and layout complexity in real time, creating truly personalized learning environments that respond to individual cognitive states.

The technology operates through real-time biosignal analysis, dynamic content chunking based on cognitive load measurements, and adaptive complexity adjustment. When cognitive overload is detected, the system automatically reduces information density, provides additional scaffolding, or suggests break periods.
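The overload-detection and chunking steps above can be sketched as follows, assuming the wearable pipeline already produces a normalized cognitive-load score between 0 and 1 (how that score is derived from EEG data is outside this sketch). The two thresholds form a hysteresis band so the layout does not flip back and forth when the score hovers near a single cut-off; the threshold values, chunk sizes, and break rule are illustrative assumptions.

```python
from typing import List


class LoadAdapter:
    """Track overload state from a normalized cognitive-load score in [0, 1]."""

    def __init__(self, enter_at: float = 0.75, exit_at: float = 0.55,
                 break_after: int = 10):
        self.enter_at = enter_at        # score that switches overload mode on
        self.exit_at = exit_at          # lower score that switches it off again
        self.break_after = break_after  # consecutive overloaded updates before suggesting a break
        self.overloaded = False
        self.streak = 0                 # consecutive updates spent in overload

    def update(self, load: float) -> bool:
        # Hysteresis: entering requires a high score, leaving requires a clearly lower one.
        if not self.overloaded and load >= self.enter_at:
            self.overloaded = True
        elif self.overloaded and load <= self.exit_at:
            self.overloaded = False
        self.streak = self.streak + 1 if self.overloaded else 0
        return self.overloaded

    @property
    def suggest_break(self) -> bool:
        return self.streak >= self.break_after


def chunk(sentences: List[str], overloaded: bool) -> List[List[str]]:
    """Split content into smaller chunks (lower information density) under overload."""
    size = 2 if overloaded else 5
    return [sentences[i:i + size] for i in range(0, len(sentences), size)]
```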

Emotiv's EEG headsets illustrate the underlying technology. Marketed for wellness and personal development, they monitor brainwave activity in real time and estimate mental states such as focus, relaxation, and stress. Fed into a learning platform, signals like these could support a more responsive experience that adapts to each learner's needs and state.

In the near future, these systems may combine data from multiple biosensors, including heart rate variability, eye tracking, and galvanic skin response, to form a richer, multidimensional understanding of a learner's cognitive and emotional states. Advanced AI algorithms could predict when a student is about to become disengaged or frustrated, proactively adjusting not just the content but the modality of delivery, switching between text, video, or interactive simulations as needed.
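A minimal sketch of the multi-sensor idea: standardize each reading against the learner's own baseline, combine the results with weights into a single disengagement score, and switch delivery modality when the score crosses a threshold. The signal names, weights, threshold, and modality order are all hypothetical; a real system would learn them from labelled data rather than hard-code them.

```python
from statistics import mean, pstdev
from typing import Dict, List


def zscore(value: float, history: List[float]) -> float:
    """Standardize a reading against the learner's own baseline readings."""
    sd = pstdev(history)
    return 0.0 if sd == 0 else (value - mean(history)) / sd


# Hypothetical weights. Lower heart rate variability is commonly associated
# with stress, hence the negative weight on "hrv".
WEIGHTS: Dict[str, float] = {"hrv": -0.4, "gsr": 0.35, "blink_rate": 0.25}

# Hypothetical escalation order of delivery modalities.
NEXT_MODALITY: Dict[str, str] = {"text": "video", "video": "interactive"}


def disengagement_score(readings: Dict[str, float],
                        baselines: Dict[str, List[float]]) -> float:
    """Weighted sum of per-signal z-scores."""
    return sum(w * zscore(readings[k], baselines[k]) for k, w in WEIGHTS.items())


def choose_modality(score: float, current: str, threshold: float = 1.0) -> str:
    """Move to the next modality when the score exceeds the threshold."""
    if score > threshold:
        return NEXT_MODALITY.get(current, current)
    return current
```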

2. Predictive accessibility, aka “accessibility forecasting”

Workplace platforms are developing sophisticated behavioral analysis capabilities that forecast upcoming accessibility needs before users explicitly request accommodations. These systems analyze interaction patterns, pause lengths, scroll speeds, and historical support requests to predict when alternative content formats would be beneficial.

The technology works by analyzing detailed interaction logs, tracking user behavior patterns over time, and correlating these patterns with successful accessibility interventions. When the system detects patterns suggesting user fatigue during extended reading sessions, it can proactively offer audio narration options.
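The fatigue example above can be approximated with a simple rule, shown here in Python as a stand-in for the learned correlation models the paragraph describes: compare a reader's recent reading speed with their own early-session baseline, and offer narration when it drops sharply during a long session. The `ReadingSample` type, the 20-minute minimum, the five-sample windows, and the 30% drop threshold are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReadingSample:
    minute: int              # minutes since the session started
    words_per_minute: float  # estimated from scroll position and dwell time


def should_offer_narration(samples: List[ReadingSample],
                           min_session_min: int = 20,
                           baseline_n: int = 5,
                           recent_n: int = 5,
                           drop_ratio: float = 0.7) -> bool:
    """Return True when recent reading speed falls below `drop_ratio` times
    the early-session baseline during a sufficiently long session."""
    if len(samples) < baseline_n + recent_n or samples[-1].minute < min_session_min:
        return False
    baseline = sum(s.words_per_minute for s in samples[:baseline_n]) / baseline_n
    recent = sum(s.words_per_minute for s in samples[-recent_n:]) / recent_n
    return recent < drop_ratio * baseline
```

Comparing each reader against their own baseline, rather than a global average, avoids flagging people who simply read at a different pace.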

Predictive accessibility has been reported to reduce accessibility-related frustration by 30%. By anticipating needs rather than waiting for users to identify and request specific accommodations, this approach makes experiences smoother and more responsive.

In the near future, emerging platforms may utilize multimodal data, including facial expression analysis and voice stress detection, to refine their forecasts and provide even more nuanced support. Instead of simply suggesting alternative formats, future systems might automatically switch content modes in real time, seamlessly transitioning from text to audio or simplified visuals as a user’s cognitive or physical state changes.

3. AI wearables will lead the next wave of accessible technology

The accessibility landscape is experiencing a democratization moment through the introduction of affordable AI wearables. Meta's Ray-Ban smart glasses, priced under $300, represent a significant step toward mainstream accessible technology. With emotion recognition and scene analysis capabilities improving rapidly, accessibility tools are transitioning from specialized equipment to everyday consumer products.

The October 2024 launch of Be My Eyes integration with Ray-Ban Meta glasses demonstrates practical implementation. This integration allows blind users to connect with sighted volunteers through simple voice commands, creating seamless, hands-free accessibility tools that integrate naturally into daily life.

By 2035, AI wearables could provide enhanced emotional support through real-time analysis of users' emotional states, predictive assistance based on biosignals and behavior patterns, and significantly greater independence for people with disabilities. These devices could enable seamless interaction with the environment, and broader integration could embed accessibility features into everyday products, creating inclusive environments that benefit everyone.

4. AI-driven accessibility compliance automation

Design tools are incorporating automatic accessibility scanning capabilities that analyze mockups for WCAG 2.2 violations, generate contextually appropriate alt text, and preview screen-reader navigation flows directly within design environments.
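Design tools work on mockups, but the same kind of automated check is easy to see on rendered markup. As an illustrative sketch (not how any specific design tool works), this Python scanner uses the standard library's `html.parser` to flag `<img>` elements with no `alt` attribute, a common failure of WCAG success criterion 1.1.1 (Non-text Content). An empty `alt=""` is deliberately not flagged, because it is the correct way to mark decorative images.

```python
from html.parser import HTMLParser
from typing import List, Tuple


class MissingAltScanner(HTMLParser):
    """Collect <img> elements that have no alt attribute at all."""

    def __init__(self) -> None:
        super().__init__()
        # Each violation is (line, column offset, src).
        self.violations: List[Tuple[int, int, str]] = []

    def handle_starttag(self, tag, attrs):
        # Also called for self-closing tags like <img ... /> via handle_startendtag.
        if tag == "img":
            names = {name for name, _ in attrs}
            if "alt" not in names:
                line, col = self.getpos()
                src = dict(attrs).get("src") or "(no src)"
                self.violations.append((line, col, src))


def scan(html: str) -> List[Tuple[int, int, str]]:
    scanner = MissingAltScanner()
    scanner.feed(html)
    scanner.close()
    return scanner.violations
```

A design-tool integration would run an equivalent check on its own document model and could pair each violation with a suggested, human-reviewed alt text.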

These systems provide comprehensive accessibility support, including color-contrast remediation to meet WCAG contrast requirements, automatic alt-text generation through computer vision, and live code snippets demonstrating correct use of ARIA attributes.
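Contrast checking is one part of this that can be shown exactly. The sketch below implements the WCAG 2.x relative-luminance and contrast-ratio formulas and pairs them with a deliberately naive remediation step that darkens the text color until it reaches the 4.5:1 AA threshold for normal-size text. The `darken_until_pass` helper is a hypothetical illustration that assumes a light background; a production tool would also need to handle dark backgrounds and preserve the intended hue.

```python
from typing import Tuple

RGB = Tuple[int, int, int]


def _linear(channel: int) -> float:
    """Linearize an 8-bit sRGB channel. Older WCAG text cites 0.03928 instead of
    0.04045; both give the same result for 8-bit values."""
    c = channel / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: RGB) -> float:
    r, g, b = (_linear(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: RGB, bg: RGB) -> float:
    """(L_lighter + 0.05) / (L_darker + 0.05), ranging from 1 to 21."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def darken_until_pass(fg: RGB, bg: RGB, target: float = 4.5) -> RGB:
    """Scale the foreground toward black in 5% steps until the target ratio is met."""
    factor = 1.0
    candidate = fg
    while contrast_ratio(candidate, bg) < target and factor > 0:
        factor = round(factor - 0.05, 2)
        candidate = tuple(round(v * factor) for v in fg)
    return candidate
```

For example, mid-gray `#777777` on white comes out just under 4.5:1 and fails AA for normal text, while one 5% darkening step to `#717171` passes.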

The return on investment can be substantial: automated compliance checking has been reported to reduce manual audit time by 70%, speeding up design iterations and release cycles. By integrating accessibility validation into the design process, teams can address compliance from the initial design phase rather than discovering issues during final audits.

As governments and organizations adopt stricter accessibility mandates worldwide, design tools will likely offer fully integrated, real-time compliance monitoring as a default capability, reducing reliance on separate accessibility audits. Future systems may automatically adapt design elements to meet diverse accessibility needs based on user demographics or project location, supporting compliance with local and international standards from the outset.

5. Inclusive AI agents as accessibility advocates

Museums and cultural institutions are pioneering inclusive AI agents that serve as accessibility advocates for visitors with disabilities. These on-site AI assistants proactively guide visually impaired visitors through exhibits using voice directions and smart haptic pathways, helping them engage more fully with cultural content.

The RE-Bokeh system, implemented at the Houston Museum of Natural Science, demonstrates the practical impact of this approach. Using real-time filtering technology, the system reportedly improved usable vision for over 85% of low-vision visitors, dramatically improving their ability to engage with exhibits independently.

These AI agents combine computer vision and speech processing with contextual natural language processing (NLP) for domain-specific guidance. They provide museum-specific information and exhibit details tailored to each visitor's individual needs and preferences.

In the near future, inclusive AI agents will extend far beyond museums, appearing in schools, hospitals, transit, and virtual spaces. These systems could offer real-time translation, adaptive navigation, and personalized guidance for diverse needs. As adoption spreads, environments will become more intuitively accessible and responsive. Ultimately, every space (physical and digital) will proactively support inclusion for all users.

Ethical AI and data privacy in accessibility

The advancement of AI-powered accessibility presents significant challenges in balancing the benefits of personalization with the need for privacy protection. As AI technologies gather increasingly detailed personal and behavioral data to improve accessibility features, maintaining user privacy becomes more complex.

Best practices in this evolving field emphasize consent-based data collection, ensuring that users explicitly consent to the gathering of personal and behavioral data. Transparent AI decision explanations provide clear information about how AI decisions are made, particularly when these decisions impact accessibility features and user experience.
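The two practices above can be sketched together: drop any behavioral event whose data category the user has not explicitly opted into (deny by default), and attach a plain-language explanation to every adaptation. The category names, types, and message format below are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class ConsentRecord:
    # Data categories the user has explicitly opted into, e.g. {"interaction"}.
    granted: Set[str] = field(default_factory=set)


@dataclass
class Event:
    category: str               # data category this event belongs to
    payload: Dict[str, float]   # the measured values


def filter_events(events: List[Event], consent: ConsentRecord) -> List[Event]:
    """Keep only events in categories the user opted into; everything else is dropped."""
    return [e for e in events if e.category in consent.granted]


def explain_adaptation(change: str, signals: List[str]) -> str:
    """Plain-language explanation shown to the user alongside an adaptation."""
    return f"{change} (based on: {', '.join(signals)})"
```

Filtering before storage, rather than after, means unconsented data such as biometric readings never enters the personalization pipeline at all.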

Standards alignment remains crucial, with systems designed to comply with GDPR, ADA, and emerging AI ethics frameworks. This ensures compliance with existing privacy laws and accessibility standards while integrating ethical guidelines for AI development and deployment in accessibility contexts.

Conclusion

AI-powered accessibility is about more than technology: it treats human diversity as a source of innovation rather than a challenge to overcome. The products that succeed will be those that anticipate, adapt, and evolve with their users, whatever their abilities and preferences.

This transformation is already underway. Organizations that adopt AI-powered accessibility today will lead the next generation of inclusive digital experiences, meeting both compliance requirements and user expectations for intelligent, adaptive interfaces.

Ready to future-proof your product with AI-powered accessibility? Let’s explore how adaptive interfaces, real-time personalization, and compliance automation can make your digital experience more inclusive. Contact us to schedule a consultation or accessibility audit with our IAAP-certified accessibility experts.
