Your voice, amplified by intelligence.

Live conversations

Automate workflows

Qualify & engage

Voice that works smarter for your business.

Tech stack

Built for teams that run on conversations.

Enterprise-ready

Easy to adopt

Secure and compliant

FAQs

How many languages does the voice agent support?

The agent can be configured to support multiple languages. Setting up the right model for each additional language typically takes 2–3 hours.

How accurate is the voice agent?

Accuracy depends on the underlying model and the complexity of the queries. With advanced LLMs such as GPT-4, responses are highly reliable, and the system can be fine-tuned on domain-specific data to improve accuracy further.

Will multilingual support affect performance?

Supporting multiple languages in the same conversation (for example, English and Hindi) can add slightly higher latency, but the system is optimized to keep delays minimal in real-time interactions.

Can the agent analyze tone or sentiment?

The agent does not perform tone analysis out of the box, but sentiment analysis and tone detection can be added for specific use cases.

Which speech models does the agent use?

The system works with speech models comparable to Google's and Microsoft's voice engines, and it can switch between models based on your requirements.

How does the voice agent enable real-time conversations?

The agent uses WebRTC frameworks such as LiveKit for low-latency, real-time communication. A custom WebRTC infrastructure can also be built, though it requires more setup time.

Can the system handle voice-to-voice conversations?

Yes. The agent supports voice-to-voice communication and can generate responses in natural, human-like voices.

Is my voice data stored? How secure is the system?

By default, no recordings or voice data are stored. Data storage can be enabled if your use case requires it.

Strong guardrails ensure that only safe, relevant, and compliant information is shared. Data and prompts are managed securely with enterprise-grade safeguards.

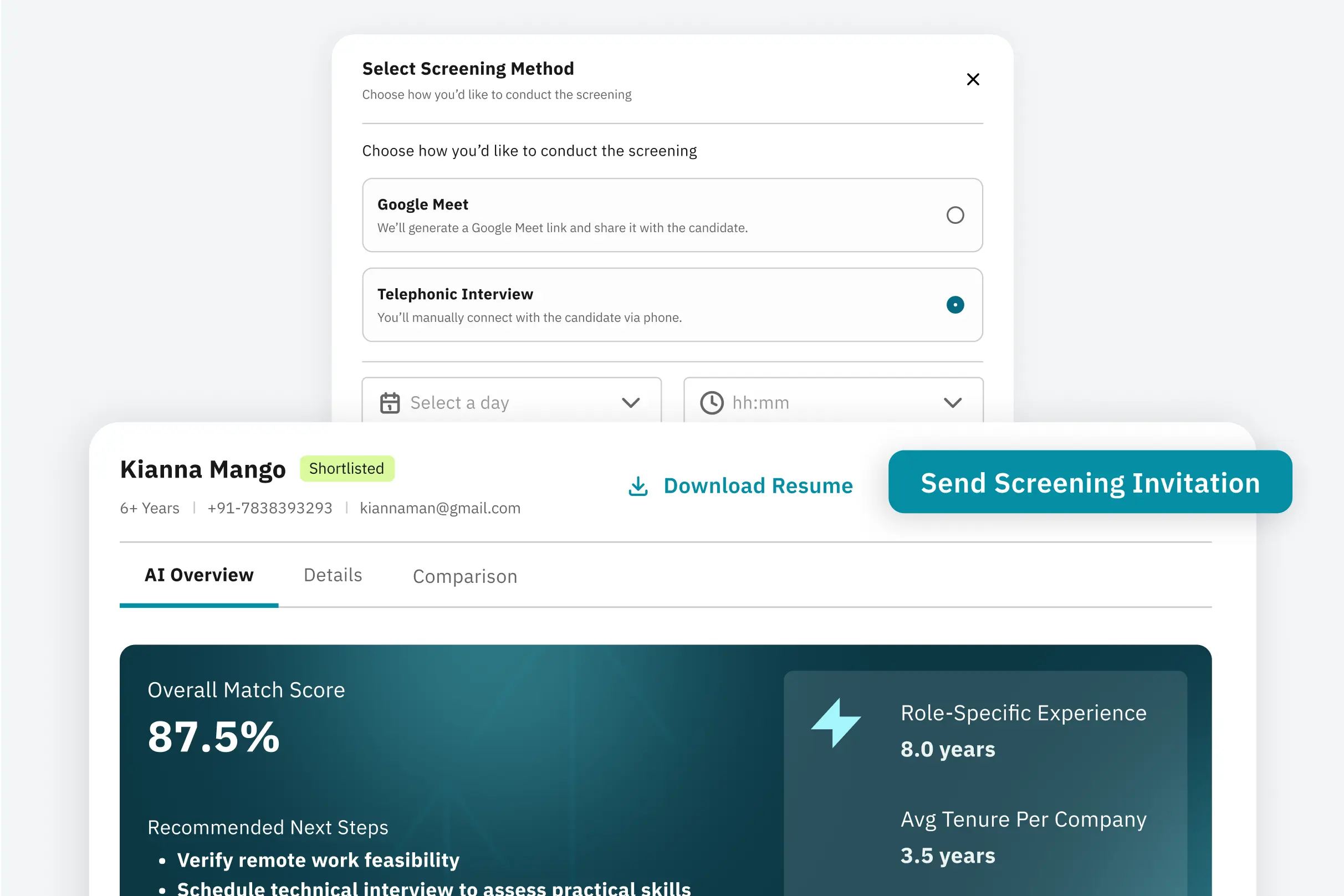

Does the agent support speaker recognition?

Speaker recognition is not fully supported yet, but the system can be extended to handle it. Voice modification (changing how the agent sounds) and voice cloning are also possible.

Does the voice agent work over phone calls?

Absolutely. The same stack supports standard telephony, so the agent can handle live phone calls as well as web-based voice interactions.

Can the agent analyze both voice and text inputs?

Yes. The system includes analyzers that can process both spoken voice inputs and text-based inputs, ensuring consistent and intelligent responses across channels.