Everyone is talking about AI agents: autonomous systems that can make decisions and perform complex tasks without constant human input. They're poised to deliver up to $450 billion in economic value by 2028 through revenue gains and cost savings. That's a massive number, and it represents a huge opportunity for companies willing to get it right.

So why are most organizations still struggling to scale?

The answer isn't a technical one. The core conflict is a paradox: while the potential of fully autonomous AI is growing, trust in AI agents is dropping. The technology is advancing faster than our ability to integrate it responsibly. This is the central challenge, and if you're a leader thinking about your AI strategy, it's the most critical problem to solve.

The true value of agentic AI adoption isn't found in full autonomy. It's in a new model of human-AI collaboration, where trust, transparency, and strategic oversight are the real drivers of success.

The real conflict lies in the tension between autonomy and oversight. We've been sold a vision of AI as a hands-off, "set it and forget it" solution. But the data shows us that this black-box approach is failing. It’s creating a gap between the promise of efficiency and the reality of implementation.

The issue of trust in AI agents

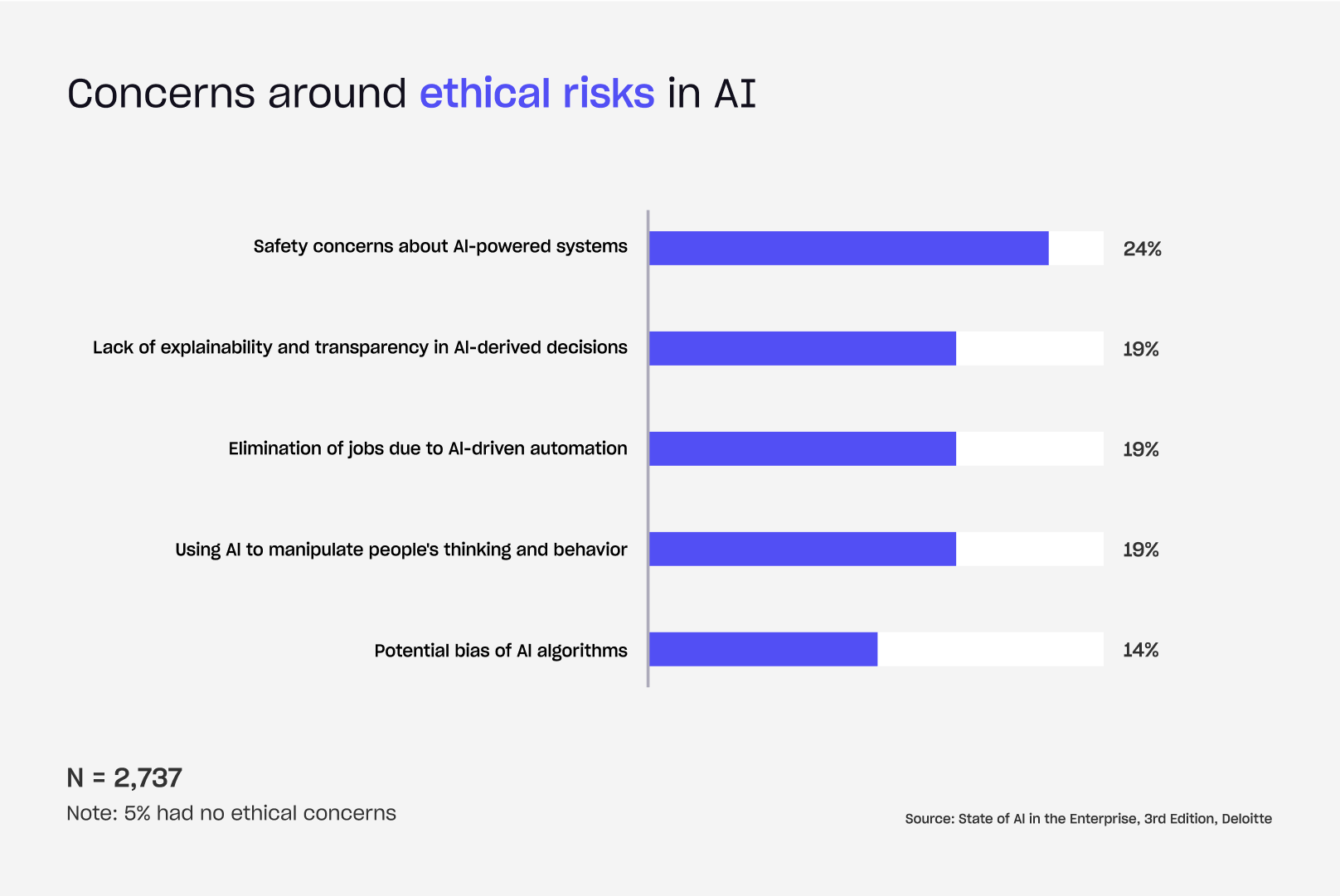

The data paints a sobering picture: trust in ethical AI has plummeted from 43% to just 27%. This isn't merely a matter of public perception but a fundamental business challenge. When trust erodes, AI adoption stalls, and the path to scaling becomes littered with skepticism and resistance.

While the headline figure points to a broad erosion of trust, the data behind the decline reveals where people’s anxieties are most concentrated. When asked to pinpoint the single most significant ethical AI risk, respondents overwhelmingly cited safety concerns, followed closely by issues like AI transparency, job displacement, manipulation, and bias. These concerns are not abstract; they directly influence whether individuals and organizations feel comfortable adopting AI systems at scale.

We're also facing an "explainability" crisis: if an AI agent makes a decision that impacts a customer or an employee, can we trace its logic? Can we explain the "why"? When the answer is no, trust evaporates. The ethical AI implications are no longer theoretical. Concerns around inherent bias in training data, algorithmic fairness, and accountability are now central to the conversation.

These concerns are shaping executive decisions, and studies reveal stark findings. For example, two in five executives now believe the risks associated with AI outweigh the benefits. This is a significant roadblock in the market, and it tells us that product leaders are no longer just looking at the potential upside.

They're carefully weighing the downside risks of reputation damage, legal exposure, and failed implementations. To move forward, we can't simply ignore these fears. We have to address them head-on with a new approach.

The power of human-AI collaboration

The future of work is not a zero-sum game between humans and AI. It's a symbiotic relationship where each partner brings unique, irreplaceable strengths to the table. We call this "Human-Agent Chemistry," and it’s the engine that drives true innovation and scalable growth.

AI excels at processing vast data sets, identifying patterns, and executing repetitive tasks with relentless speed and precision. But it lacks the nuance, creativity, and ethical reasoning that are the hallmarks of human intelligence.

When these two capabilities are deliberately combined, the results are powerful and measurable. Our analysis of effective human-AI collaboration reveals a clear trend for success:

- A 65% increase in human engagement with high-value, strategic tasks.

- A 53% rise in creativity, as AI acts as a brainstorming partner and frees up our teams to explore bigger, more ambitious ideas.

- A 49% boost in employee satisfaction, because people are empowered to do more meaningful, impactful work instead of getting bogged down in mundane activities.

Building this chemistry requires a strategic approach. It starts by moving beyond a simple "human-in-the-loop" model (a reactive safety net) to designing a proactive, collaborative environment.

We must cultivate a culture where human oversight isn't a formality but an essential, integrated part of the process. This means clearly defining roles, establishing a foundation of shared understanding, and ensuring that our teams are always in command, not just on the sidelines.

The imperative of strategic oversight

Allowing AI agents to operate with full, unchecked autonomy is a gamble no business should take. It's the difference between a co-pilot and an unsupervised driver. The role of human oversight is to bridge AI's technical potential with your organization's core values, ethics, and long-term vision. This strategic oversight provides high-level guidance and implements robust checks and balances to ensure each agent performs optimally.

Without intentional, strategic human command, we risk allowing AI to operate in a black box, making unexplainable decisions that could lead to significant reputational damage and financial risk. The goal is to build a system where the AI is a trusted partner, but the human remains the ultimate decision-maker.

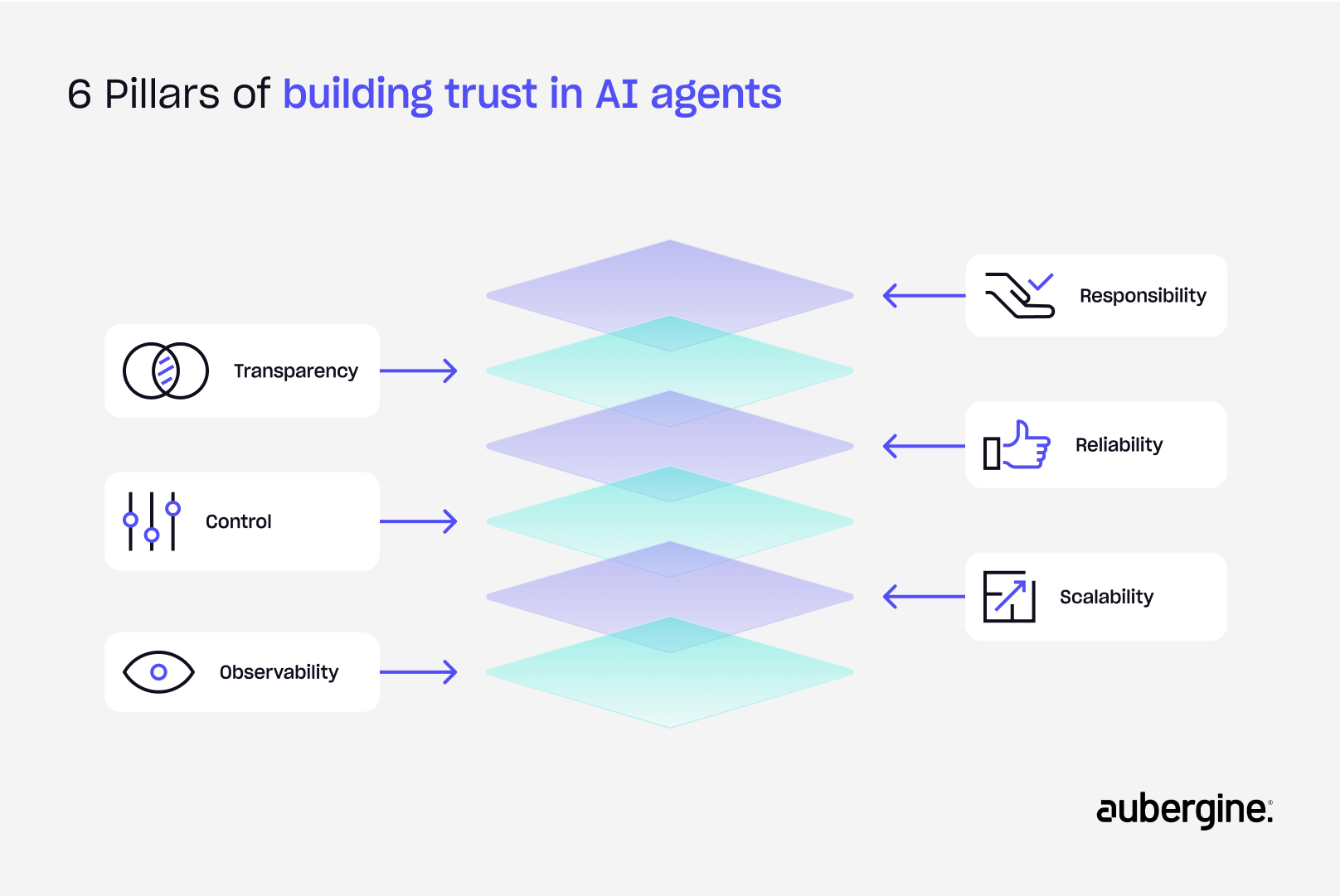

The six pillars for building trust in AI agents

To build an AI agent that your users can truly trust, you must establish a strategic framework that puts human values and business integrity at the forefront. Here are the six principles we follow to build trust from day one.

AI transparency

AI transparency means building systems that don’t act like black boxes. You can’t ask people to place their trust in a system whose logic and data usage they can’t understand. This is the difference between a trusted AI agent and a magician. Your teams and customers deserve to know how a decision was reached.

We have to move past a simple output and provide a clear, understandable audit trail of the agent's reasoning. This means building in features that let users interrogate the system, allowing them to see the data points it considered and the logical steps it took. This doesn't just build confidence; it turns the AI from a mysterious oracle into a collaborative partner that can be understood and relied upon.
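One way to picture such an audit trail is as a structured record attached to every decision. The sketch below is only an illustration: the names `DecisionRecord`, `ReasoningStep`, and the example lead-qualification data are hypothetical, not part of any specific product or library.

```python
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
import json


@dataclass
class ReasoningStep:
    """One step in the agent's reasoning (hypothetical structure)."""
    description: str          # what the agent concluded at this step
    data_points: list[str]    # the inputs it considered


@dataclass
class DecisionRecord:
    """Audit-trail entry for a single agent decision."""
    agent_id: str
    decision: str
    steps: list[ReasoningStep] = field(default_factory=list)
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def add_step(self, description: str, data_points: list[str]) -> None:
        self.steps.append(ReasoningStep(description, data_points))

    def explain(self) -> str:
        """Render the trail as numbered, human-readable lines."""
        lines = [f"Decision: {self.decision}"]
        for i, step in enumerate(self.steps, start=1):
            sources = ", ".join(step.data_points) or "none"
            lines.append(f"{i}. {step.description} (considered: {sources})")
        return "\n".join(lines)

    def to_json(self) -> str:
        """Serialize the full record so it can be stored or reviewed later."""
        return json.dumps(asdict(self), indent=2)


# Illustrative data only.
record = DecisionRecord(agent_id="lead-qualifier", decision="Route lead to senior broker")
record.add_step("Budget exceeds premium threshold", ["stated_budget", "premium_threshold"])
record.add_step("Lead asked about negotiation terms", ["chat_transcript"])
print(record.explain())
```

Keeping the steps and the data points side by side is what lets a user "interrogate" a decision after the fact, whether through a UI or a stored JSON log.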

Building control and human-in-the-loop functionality

For an AI agent to be truly adopted, your people must feel empowered and in control. This requires building systems that provide users with direct levers to manage the AI's behavior. This isn't just a simple failsafe; it's a critical feature for quality assurance, ethical alignment, and building confidence.

You can bring this control in through several mechanisms:

- Configurable guardrails and policies: Implement customizable rules that define the boundaries of the AI's actions. For instance, you can set a policy that prevents an AI agent from making financial transactions above a certain amount or restricts it from accessing sensitive customer data without explicit human approval.

- Real-time intervention control: Users must have the ability to step in and correct the AI's actions as they happen. This could be a simple "edit" button on an AI-generated email or the ability to modify a recommendation before it is sent to a client.

- AI to human handoff workflows: Design clear workflows where the AI recognizes its limitations and seamlessly hands a task or conversation over to a human expert. This is especially crucial for complex or emotionally charged interactions where human nuance is essential.

- Escalation workflows: For high-stakes decisions, the AI should present a recommendation but automatically route the final decision to a human. This ensures that the final authority and accountability always rest with a person.
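As a rough illustration of how guardrails and escalation can fit together, here is a minimal sketch of a policy check that runs before an agent acts. Everything in it is an assumption made for the example: the `Policy` fields, the action kinds, and the rule that unknown action types are blocked outright.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    ESCALATE = "escalate"   # route the final decision to a human
    BLOCK = "block"


@dataclass(frozen=True)
class Policy:
    """Configurable guardrails (hypothetical fields)."""
    max_transaction_amount: float
    sensitive_resources: frozenset[str]


@dataclass(frozen=True)
class ProposedAction:
    kind: str                     # "transaction" or "data_access" in this sketch
    amount: float = 0.0
    resource: str = ""
    human_approved: bool = False


KNOWN_KINDS = {"transaction", "data_access"}


def evaluate(action: ProposedAction, policy: Policy) -> Verdict:
    """Decide whether the agent may proceed, must escalate, or is blocked."""
    if action.kind not in KNOWN_KINDS:
        return Verdict.BLOCK          # anything outside the defined boundaries
    if action.human_approved:
        return Verdict.ALLOW          # a person has already signed off
    if action.kind == "transaction" and action.amount > policy.max_transaction_amount:
        return Verdict.ESCALATE
    if action.kind == "data_access" and action.resource in policy.sensitive_resources:
        return Verdict.ESCALATE
    return Verdict.ALLOW


policy = Policy(max_transaction_amount=1000.0,
                sensitive_resources=frozenset({"customer_pii"}))

print(evaluate(ProposedAction("transaction", amount=250.0), policy))    # Verdict.ALLOW
print(evaluate(ProposedAction("transaction", amount=5000.0), policy))   # Verdict.ESCALATE
print(evaluate(ProposedAction("data_access", resource="customer_pii",
                              human_approved=True), policy))            # Verdict.ALLOW
```

The design choice worth noting is that the agent never decides on its own whether a high-stakes action goes through; the policy does, and an `ESCALATE` verdict always ends with a person.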

Observability: Monitoring AI agent performance

You can't manage what you can't monitor. Think of an AI agent like a critical piece of machinery; you wouldn't operate it without a dashboard. Observability is about creating that dashboard for your AI. This means implementing real-time dashboards that track the agent's performance, its workload, and its output. It also means setting up automated alerts for any unexpected behavior or performance dips.

By actively monitoring the system, you can proactively catch and correct issues before they escalate, ensuring the AI operates reliably and predictably. This level of oversight turns a reactive response into a proactive strategy, safeguarding your business from unseen errors.
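To make the idea of automated alerts concrete, the following sketch tracks an agent's recent outcomes in a rolling window and flags when the error rate crosses a threshold. The `AgentMonitor` class, the window size, and the 20% threshold are illustrative assumptions; a production setup would typically feed a dedicated monitoring tool instead.

```python
from collections import deque


class AgentMonitor:
    """Rolling-window error-rate tracker (hypothetical helper)."""

    def __init__(self, window_size: int = 50, max_error_rate: float = 0.1):
        # Only the most recent `window_size` outcomes are kept.
        self.outcomes = deque(maxlen=window_size)
        self.max_error_rate = max_error_rate

    def record(self, success: bool) -> None:
        self.outcomes.append(success)

    def error_rate(self) -> float:
        if not self.outcomes:
            return 0.0
        failures = sum(1 for ok in self.outcomes if not ok)
        return failures / len(self.outcomes)

    def should_alert(self) -> bool:
        """True when the recent error rate exceeds the configured limit."""
        return self.error_rate() > self.max_error_rate


monitor = AgentMonitor(window_size=10, max_error_rate=0.2)
for ok in [True] * 8 + [False] * 2:
    monitor.record(ok)
print(monitor.error_rate(), monitor.should_alert())   # 0.2 False

monitor.record(False)  # the oldest success drops out of the window
print(monitor.error_rate(), monitor.should_alert())   # 0.3 True
```

Using a window rather than a lifetime average means a sudden dip in performance shows up quickly instead of being diluted by a long history of good results.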

Responsibility: Governance frameworks for AI oversight

For AI to be trustworthy, there must be clear accountability. This isn’t a nice-to-have; it’s a non-negotiable part of a mature AI strategy. You need a formal governance framework that establishes clear lines of authority for the AI's actions.

This might involve creating an AI ethics committee or assigning a "human owner" for each agent. An organization must take responsibility for its AI's output, and every stakeholder should understand their role in the process. When an organization publicly outlines its ethical principles and backs them up with a defined governance structure, it builds a foundation of trust that resonates with both employees and consumers, mitigating significant legal and reputational risk.

Reliability: Data quality and bias mitigation in AI agents

Trust is earned through consistent, high-quality performance. Your AI's output must be reliable, accurate, and free from bias. This starts at the source: the data. Investing in high-quality, unbiased training data is not an expense; it’s an insurance policy.

A single flaw in your data can lead to a domino effect of unreliable results and eroded trust. This principle also extends to rigorous testing, feedback loops for continuous learning, and validation processes. By ensuring your AI consistently delivers on its promises, you build the credibility needed for it to become an indispensable part of your operations. When your AI is a reliable partner, it boosts productivity and efficiency across the board.

Scalability: Standardizing responsible AI deployment

Trust is difficult to earn but easy to lose, especially at scale. A fragile, non-standardized approach to AI adoption cannot grow with your company. The one-off, "custom" agent you build for one team might work today, but it will become a liability when you try to deploy 100 of them across the organization. A flawed implementation can burn many productive hours and deepen resistance to, and skepticism about, AI adoption.

To scale responsibly and maintain trust, you need a robust framework.

- Modular architecture: Build agents with reusable components so you can easily replicate and update them without starting from scratch.

- Cloud-native infrastructure: Use cloud platforms with auto-scaling and containerization (like Kubernetes) to handle high demand reliably.

- Multi-agent orchestration: Use frameworks like LangChain or CrewAI to coordinate specialized agents, distributing tasks for better efficiency and complex workflows.

- Model and cost optimization: Deploy the right-sized models for each task and use techniques like caching and prompt optimization to manage costs as you grow.
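As a small example of the caching technique mentioned above, the sketch below wraps a model call so that repeated prompts are answered from memory instead of triggering a new, billable request. `CachedModel` and `fake_model` are hypothetical stand-ins, and normalizing prompts by case and whitespace is an assumption that will not suit every use case.

```python
import hashlib
from typing import Callable


class CachedModel:
    """Wraps any prompt -> answer function with an in-memory cache."""

    def __init__(self, call_model: Callable[[str], str]):
        self._call_model = call_model
        self._cache: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Assumption: prompts differing only in case or spacing are equivalent.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def complete(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._cache:
            self.hits += 1
            return self._cache[key]
        self.misses += 1
        answer = self._call_model(prompt)   # the only place the model is called
        self._cache[key] = answer
        return answer


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call, used only for this example."""
    return f"echo: {prompt.strip()}"


model = CachedModel(fake_model)
model.complete("What are your opening hours?")
model.complete("  what are your   OPENING hours? ")
print(model.hits, model.misses)   # 1 1
```

In practice the cache would usually live in a shared store with an expiry policy, but the principle is the same: pay for each distinct question once.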

Building a scalable approach to responsible AI is the only way to ensure trust grows with your technology, not despite it.

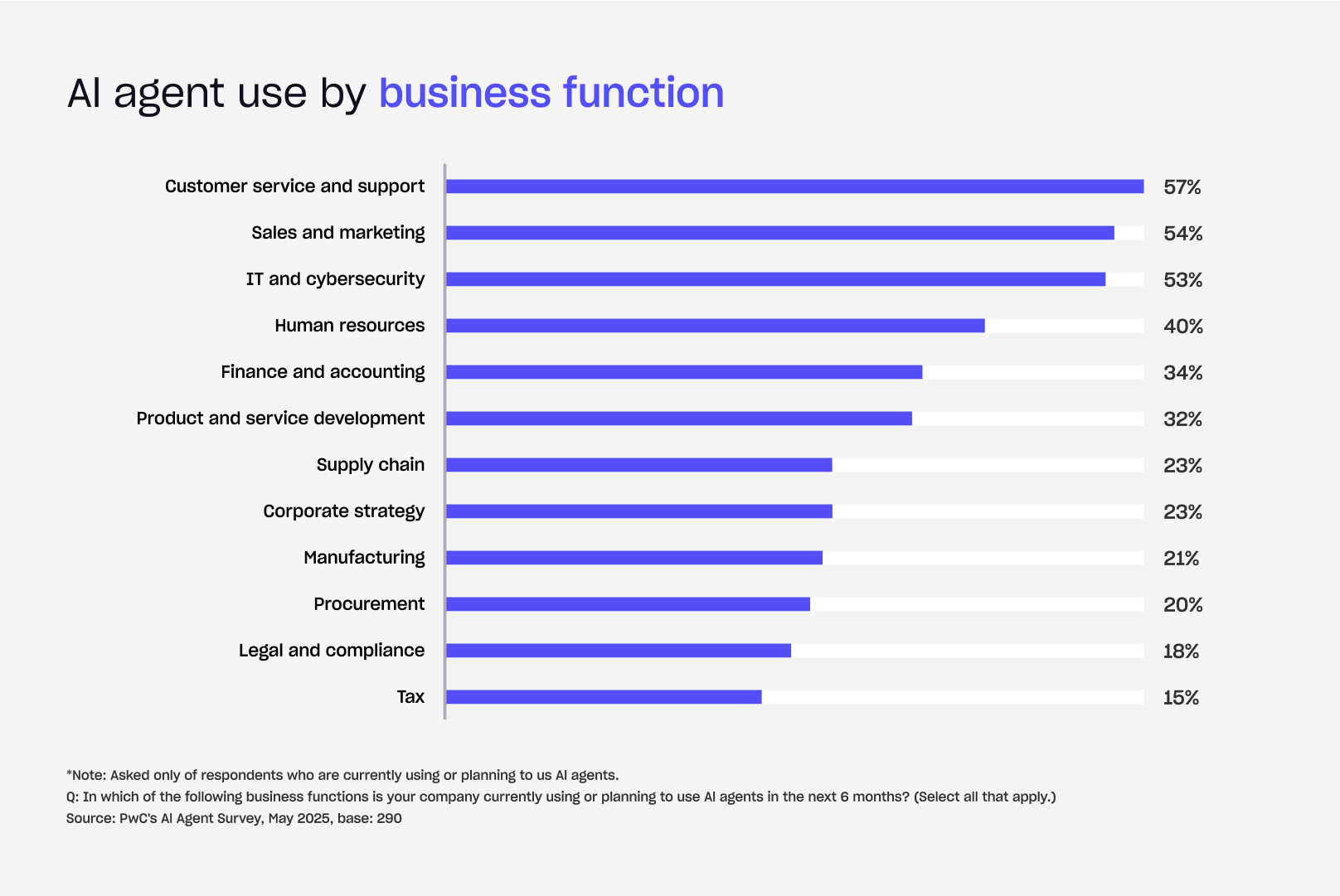

Popular use cases by business function

The principles of human-AI collaboration are already transforming industries across various use cases. We’re seeing the most significant impact in areas where high-volume, repetitive tasks create a bottleneck for high-value human work. Customer service, IT support, and sales are all proving to be fertile ground for this new model.

Consider our work with a client in the real estate business. The real estate industry is built on trust and personal relationships. Yet, brokers were spending an inordinate amount of time on manual tasks: qualifying leads, scheduling property visits, and handling initial negotiations. Our solution wasn't to replace the brokers with fully autonomous AI.

Instead, we built a hybrid, multi-agent system to turn broker expertise into a scalable, always-on intelligence. AI agents handle the heavy lifting, while human brokers have complete control, able to configure the AI's autonomy or take over any conversation at any time.

The result: a 10x increase in overall realtor operations output and a 70% increase in deal-closure probability.

This strategic approach to collaboration is a common thread among the most successful early adopters of AI agents. The data confirms that the functions leading the charge, such as customer service, sales & marketing, and IT & cybersecurity, are doing so by strategically using AI to augment, not replace, their human teams.

Overcoming barriers to scale

The most significant challenge we see is a readiness gap. Most organizations simply aren't equipped to deploy and manage AI agents at scale. Their AI infrastructure is often immature, and they lack the robust data readiness required to train and deploy agents reliably. Without a strong foundation, any attempt to scale is destined to fail.

We also have to contend with a significant ethical action gap. While most leaders are aware of the risks, few are taking concrete steps to address them. For example, while 51% of organizations are concerned about privacy, only 34% are actively working to mitigate that risk. This disconnect between concern and action is a major reason why trust is eroding and AI adoption is stalling.

To overcome these barriers and truly harness the power of AI agents, you need a strategic, proactive approach.

Strategic imperatives for success

- Focus on outcomes, not AI: The goal isn't to implement AI; it's to solve a business problem. Instead of chasing the latest technology, start by identifying a clear outcome you want to achieve. Adopt and evangelize an AI-first mindset, where you redesign core processes and business models with AI as an integral part of the solution, not a bolt-on feature.

- Build trust from day one: Trust isn't something you can add at the end of a project. It must be a core principle from the very beginning. Embed responsible AI principles, ethics, and safety into your development process. This proactive approach ensures your agents are built on a foundation of integrity and accountability.

- Reshape the organization: Successfully integrating AI agents requires more than just new tech; it demands a new culture. You must invest in upskilling employees so they can work effectively alongside AI. Create a culture that fosters human-AI collaboration, where teams see the agent as a partner, not a threat. Constant messaging around this notion is a must to ensure effective adoption.

The future of AI agents depends on human-AI collaboration

The potential of agentic AI is undeniable, with an economic value measured in hundreds of billions. But as we've seen, that value remains out of reach for most organizations due to a lack of trust and a gap in readiness. The path to scaling this technology is not through blind automation but through intentional human-AI collaboration.

To truly harness the power of AI agents, we must shift our focus. We must move beyond the hype and prioritize building trust through strategic pillars, such as AI transparency and control. We must address the readiness gap by focusing on outcomes, embedding ethics from day one, and upskilling our teams. The organizations that will win are the ones that see AI not as a replacement for human talent, but as a catalyst for it.

At Aubergine, we build AI agents that learn, adapt, and augment humans to scale business operations. Grounded in human-centered design and engineering excellence, our approach emphasizes transparency, ethical alignment, and measurable outcomes throughout the product lifecycle.

Partner with us to weave AI into your operations, build lasting customer trust, and drive measurable business growth through improved ROI, higher productivity and human+AI collaboration.
